Steven Mills - Research

This page details my current and recent research, which I've tried to organise into coherent categories: ARSpectator; Culture & Heritage; Robotics; Learning & Recognition; 3D Reconstruction; and Interaction.

My contact details are to the right, but email is generally the best option. If you wish to make a meeting time, it's best to check my calendar first.

Selected publications are listed with each topic below, but for a more complete (and often more up-to-date) list, see my Google Scholar page.

Contact Details

| Email: | steven@cs.otago.ac.nz |
|---|---|
| Phone: | +64 3 479 8501 |
| Office: | Room 245, Owheo Building |

Augmented Sports Spectator Experience

Over the last couple of years, we have seen many major advancements in sports broadcasting as well as in the interactivity of sports entertainment. Twenty-five years ago, the first real-time graphics animation of a sporting event was broadcast on television for the America's Cup, driven by NZ innovation. Nowadays spectators can remotely follow the same event live on their mobile devices. However, spectators at live sporting events often miss out on the enriched content that is available to remote viewers through broadcast media or online.

The main idea of this project is to extend NZ’s lead in this field, visualising game statistics in a novel way on the mobile devices of on-site spectators to give them access to information about the sporting event. We will provide spectators with an enriched experience like the one you see in a television broadcast. Our plan is to use new technologies like Augmented Reality to place event statistics such as scoring, penalties, team statistics, and additional player information into the field of view of the spectators based on their location within the venue. While we currently focus on delivering data to the spectators, this approach could easily be extended to support coaches and team analysts. Our research will bring sports events closer to the audience, as well as bringing the spectators closer to the events and the teams.

Key Collaborators

Funding and Support

- MBIE Endeavour 2017 Smart Ideas, $1,000,000: Situated Visualisation to Enrich Sports Experience for On-Site Spectators

- Priming Partnerships 2017, $19,500: Development of a Video-Capture System for Sports Science Research at the Forsyth Barr Stadium

Digital Culture and Heritage

Computer vision finds application in a wide variety of areas, and I am particularly interested in heritage and cultural applications. This has included working with David Green on an installation for the Art & Light exhibition as well as more traditional academic applications of computer vision. The collaborative nature of this work appeals to me, as does the value that these areas have to society.

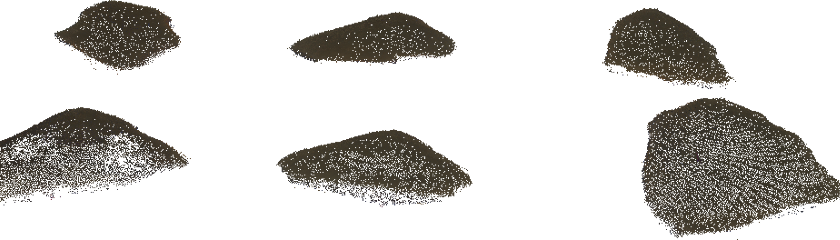

Working with archaeologists, I am investigating the manufacture of pre-European Māori stone tools. We are analysing the shape of incomplete tools, and the fragments left behind during manufacture. At this stage we are investigating ways to automate manual processes in the archaeological analysis of these artifacts, but in the longer term we hope to discover new insights into the toolmaking process.

I am also investigating the analysis of historic documents, in particular the Marsden Online Archive. Character recognition techniques that have been successful on printed text don't work on these documents. We are exploring whole-word rather than character-level recognition, drawing a parallel with recent advances in machine learning that have proven successful for object recognition.

Key Collaborators

Funding and Support

- UORG 2017, $36,000: Modelling and Analysis of Stone Tools from Images

Selected Publications and Outputs

- Lech Szymanski and Steven Mills, CNN for Historic Handwritten Document Search, Proc. Image and Vision Computing New Zealand (IVCNZ), 2017

- Hamza Bennani, Steven Mills, Richard Walter, and Karen Greig, Photogrammetric Debitage Analysis: Measuring Māori Toolmaking Evidence, Proc. Image and Vision Computing New Zealand (IVCNZ), 2017

- Steven Mills, David Green, Nancy Longnecker, James B. Brundell, Craig J. Rodger, and Peter Brook, Embodied Earth: Experiencing Natural Phenomena, Proc. Image and Vision Computing New Zealand (IVCNZ), 2016 [10.1109/IVCNZ.2016.7804425]

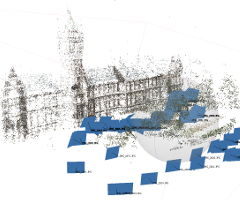

3D Reconstruction from Images

3D reconstruction pipelines take collections of images and produce 3D models of the world. While this task has become almost routine, there are many steps in the pipeline, and many areas that can still be explored and improved. The models produced find a wide variety of applications, including augmented reality, digital culture and heritage, medical imaging and surgery, and robotics.

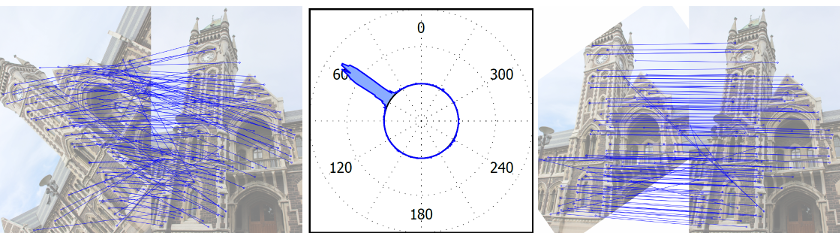

One of the main areas I am exploring is the exploitation of feature scale and orientation in this context. Most structure-from-motion pipelines are based on point features, but the most successful feature detectors (such as SIFT and ORB) estimate the size and orientation of the features as well as their location. This additional information is often discarded, but can be used to identify unreliable feature correspondences between images, or to accelerate RANSAC-based camera pose estimation.
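As a rough illustration of how scale and orientation can flag unreliable correspondences, the sketch below keeps only the matches whose relative scale and relative rotation agree with the dominant values across all matches. This is a minimal example under a simplifying assumption (a roughly uniform scale change and in-plane rotation between the two images), not the method from the publications listed below. The function name, parameter names, and thresholds are hypothetical, and angles are assumed to be in radians.

```python
import numpy as np


def filter_by_scale_and_orientation(scale_a, angle_a, scale_b, angle_b,
                                    max_log_scale_dev=0.5,
                                    max_angle_dev=np.pi / 6):
    """Return a boolean mask of matches that agree with the dominant
    relative scale and rotation between two images.

    scale_a[i], angle_a[i] describe the feature in image A for match i;
    scale_b[i], angle_b[i] describe the matched feature in image B.
    Thresholds are illustrative defaults, not tuned values.
    """
    scale_a = np.asarray(scale_a, dtype=float)
    scale_b = np.asarray(scale_b, dtype=float)
    angle_a = np.asarray(angle_a, dtype=float)
    angle_b = np.asarray(angle_b, dtype=float)

    # Relative scale per match, in log space so that 2x and 0.5x are symmetric.
    log_ratio = np.log(scale_b / scale_a)
    dominant_log_ratio = np.median(log_ratio)

    # Relative rotation per match, represented as unit complex numbers so
    # that wrap-around at +/- pi is handled correctly.
    rotation = np.exp(1j * (angle_b - angle_a))
    dominant_rotation = np.angle(np.mean(rotation))  # circular mean

    # Deviation of each match from the dominant values.
    scale_dev = np.abs(log_ratio - dominant_log_ratio)
    angle_dev = np.abs(np.angle(rotation * np.exp(-1j * dominant_rotation)))

    return (scale_dev <= max_log_scale_dev) & (angle_dev <= max_angle_dev)


if __name__ == "__main__":
    # Eight consistent matches (scale x2, rotation 0.5 rad), one match with
    # an inconsistent scale, and one with an inconsistent rotation.
    s_a = np.ones(10)
    s_b = np.array([2.0] * 8 + [8.0, 2.0])
    a_a = np.zeros(10)
    a_b = np.array([0.5] * 8 + [0.5, 2.5])
    print(filter_by_scale_and_orientation(s_a, a_a, s_b, a_b))
```

The surviving matches could then be passed to a RANSAC-based pose estimator, which would start from a smaller and cleaner set of candidates.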

Another area of interest is exploiting parallel computing to handle large data sets for vision processing. 3D reconstruction methods scale up to several thousand images, but larger scenes are often processed in parts. Even on smaller data sets, there is a lot of processing to be done, and exploiting parallel hardware can yield significant speed-ups.

Key Collaborators

Funding and Support

- UORG 2014, $18,500: 3D Reconstruction from Endoscopic Images in Surgery

- Priming Partnerships 2013, $31,000: Scalable Automated and 3D Reconstruction from Images

- UORG 2013, $25,000: 3D Motion from Video using a Model of the Otago Fishing Spider

Selected Publications and Outputs

- Huan Feng, David Eyers, Steven Mills, Yongwei Wu, and Zhiyi Huang, Principal Component Analysis Based Filtering for Scalable, High Precision k-NN Search, IEEE Transactions on Computers, 2017 [10.1109/TC.2017.2748131]

- Nabeel Khan, Brendan McCane, and Steven Mills, Better than SIFT?, Machine Vision and Applications, 26(6), 819-836, 2015 [10.1007/s00138-015-0689-7]

- Reuben Johnson, Lech Szymanski, and Steven Mills, Hierarchical Structure from Motion Optical Flow Algorithms to Harvest Three-Dimensional Features from Two-Dimensional Neuro-Endoscopic Images, Journal of Clinical Neuroscience, 22(2), 378-382, 2015 [10.1016/j.jocn.2014.08.004]

- Steven Mills, Accelerated Relative Camera Pose from Oriented Features, Proc. Int. Conference on 3D Vision (3DV), 416-424, 2015 [10.1109/3DV.2015.54]

- Steven Mills, Relative Orientation and Scale for Improved Feature Matching, Proc. Int. Conference on Image Processing (ICIP), 3484-3488, 2013 [10.1109/ICIP.2013.6738719]

Learning and Recognition

Vision is one of the main ways we learn about the world, so tasks of learning and recognition are another interest of mine. Deep networks have become dominant in this field, and I am interested in applying them, but also in learning more about how they work. Applications we have explored include medical segmentation and document analysis, and we've also examined more closely the assertion that these networks work the same way as the human visual system.

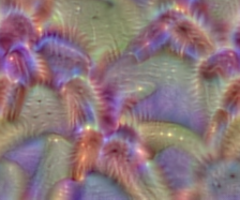

Deep learning approaches are not, however, always applicable. Sometimes more analytic techniques such as PCA or manifold learning can be applied, and in other cases there is insufficient training data for current convolutional network models. An example of the latter is fine-grained recognition - telling the difference between similar sub-classes of objects. In the picture above, for example, the middle image is of a Kea, as is one of the others. The other image is a Kaka, but telling these species apart is much more difficult than distinguishing a bird from a cat.

Key Collaborators

- Brendan McCane

- Lech Szymanski

- Tapabrata Chakraborti

- Rassoul Mesbah

Selected Publications and Outputs

- Tapabrata Chakraborti, Brendan McCane, Steven Mills, and Umapada Pal, A Generalised Formulation for Collaborative Representation of Image Patches (GP-CRC), Proc. British Machine Vision Conference (BMVC), 2017 [Conference Proceedings]

- Russel Mesbah, Brendan McCane, Steven Mills, and Anthony Robins, Improving Spatial Context in CNNs for Semantic Medical Image Segmentation, Proc. Asian Conference on Pattern Recognition (ACPR), 2017

- Xiping Fu, Brendan McCane, Steven Mills, and Michael Albert, NOKmeans: Non-Orthogonal K-means Hashing, Proc. Asian Conference on Computer Vision (ACCV), 162-177, 2014 [10.1007/978-3-319-16865-4_11]

Robotics and Sensing

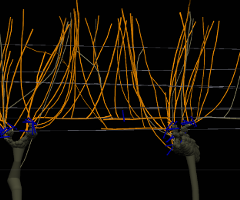

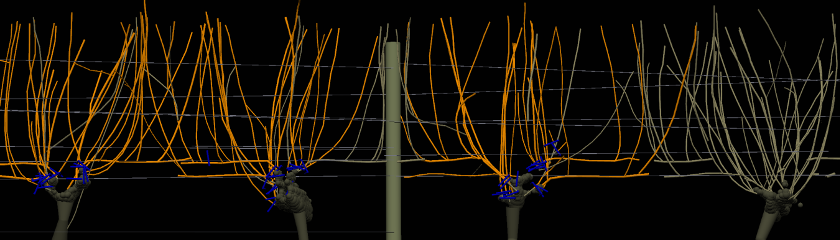

Robots rely on sensors to build models of the world around them, and vision is a rich and adaptable source of such information. Robotics imposes additional constraints on vision tasks, such as real-time processing and the need for robust models of the world. I was involved as a researcher on the recently-completed MSI/MBIE funded Vision-Based Automated Pruning project, led by Richard Green at the University of Canterbury. We worked with Tom Botterill to develop vision systems to build 3D models of grape vines and direct a robotic pruning arm to remove unwanted canes.

I am also involved in a recently-started project, Karetao Hangarau-a-Mahi: Adaptive Learning Robots to Complement the Human Workforce, led by Armin Werner (Lincoln Agritech), Will Browne (Victoria University), and Johan Potgieter (Massey University). The project aims to develop robots that can safely and effectively work alongside people. I am part of a national team of researchers developing new and robust ways to sense and model dynamic environments for this challenge.

Key Collaborators

Funding and Support

- Science for Technological Innovation 2017, $2,000,000: Karetao Hangarau-a-Mahi: Adaptive Learning Robots to Complement the Human Workforce

Publications and Outputs

- Tom Botterill, Scott Paulin, Richard Green, Samuel Williams, Jessica Lin, Valerie Saxton, Steven Mills, XiaoQi Chen, and Sam Corbett-Davies, A Robot System for Pruning Grape Vines, Journal of Field Robotics, 34(6), 1100-1122, 2017 [10.1002/rob.21680]

- Adrien Julé, Brendan McCane, Alistair Knott, and Steven Mills, Discriminative Touch from Pressure Sensors, Proc. Int. Conference on Automation, Robotics, and Applications (ICARA), 279-282, 2015 [10.1109/ICARA.2015.7081160]

- Tom Botterill, Steven Mills, and Richard Green, Bag-of-Words Driven Single Camera SLAM, Journal of Field Robotics, 28(2), 204-226, 2011 [10.1002/rob.20368]

Interaction

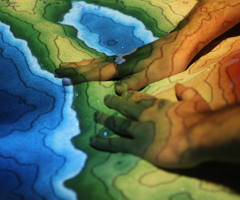

As well as the ARSpectator project, I am interested in other forms of interaction - generally when cameras are used to track or interpret people's interactions. One of the main things I'm interested in is our AR Sandbox, which is based on an open-source project at UC Davis. We have used this for teaching in Surveying, and for various outreach activities, but are interested in its potential in other areas as an intuitive, tangible, and responsive interface.

Other interaction-related work that I've been involved with includes tracking faces for interactive displays; identifying power lines for interaction with a mobile app; interactive art installations; and navigation tools for the blind.

Key Collaborators

- Holger Regenbrecht

- Tony Moore

- Simon McCallum

- Lewis Baker

- Maria Mikhisor

Funding and Support

- CALT 2016, $20,000: A Sand-Based Tangible User Interface for Geospatial Teaching

Publications and Outputs

- Lewis Baker, Steven Mills, Tobias Langlotz, and Carl Rathbone, Power Line Detection using Hough transform and Line Tracing Techniques, Proc. Image and Vision Computing New Zealand (IVCNZ), 2016 [10.1109/IVCNZ.2016.7804438]

- Antoni Moore, Ben Daniel, Greg Leonard, Holger Regenbrecht, Judy Rodda, Lewis Baker, Riki Ryan, and Steven Mills, Preliminary Usability Study of an Augmented Reality Sand-Based 3D Terrain Interface, Proc. Nat. Cartographic Conference, GeoCart, 2016

- Maria Mikhisor, Geoff Wyvill, Brendan McCane, and Steven Mills, Adapting Generic Trackers for Tracking Faces, Proc. Image and Vision Computing New Zealand (IVCNZ), 2015 [10.1109/IVCNZ.2015.7761570]