
Steven Mills - Research

Here you can find out a bit more about my current research projects, as well as those I worked on while at Areograph and the Geospatial Research Centre.

My main research area is computer vision, and I am a member of the Graphics and Vision research group. Within computer vision, my main focus is recovering 3D structure from multiple images. This is an area that is receiving a lot of attention at the moment, and I am interested in using prior knowledge to improve reconstruction quality. This prior knowledge can come from many sources and can be precise and metric (such as lens calibration information or GPS camera locations), or vague and qualitative (such as knowledge that consecutive images usually view similar parts of the scene). As well as the 3D structure recovery problem, I am interested in computer vision generally, and in related fields such as image processing and computer graphics.
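To illustrate how such priors can be used, here is a minimal sketch of one common step: deciding which image pairs are worth matching before reconstruction. It assumes a hypothetical input format (a list of `(x, y)` GPS positions in metres, with `None` where no GPS fix exists) and combines the qualitative "consecutive images overlap" prior with the metric GPS prior. The function name and parameters are illustrative only, not taken from any particular system.

```python
import math
from itertools import combinations


def candidate_pairs(gps_positions, window=2, max_distance=None):
    """Return index pairs (i, j), i < j, that are worth matching.

    gps_positions: list of (x, y) camera positions in metres, or None
    for images without a GPS fix (hypothetical input format).
    window: pairs whose capture indices differ by at most this much are
    always kept (the qualitative "consecutive images overlap" prior).
    max_distance: if given, pairs whose GPS positions lie within this
    distance are also kept (the metric GPS prior).
    """
    pairs = set()
    for i, j in combinations(range(len(gps_positions)), 2):
        if j - i <= window:
            pairs.add((i, j))
            continue
        if max_distance is None:
            continue
        pi, pj = gps_positions[i], gps_positions[j]
        if pi is not None and pj is not None and math.dist(pi, pj) <= max_distance:
            pairs.add((i, j))
    return sorted(pairs)
```

Restricting matching this way avoids comparing every image with every other one, which matters when a survey contains thousands of images.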

Current Research

Vision-Based Automated Pruning: I am part of a team led by Dr Richard Green at the University of Canterbury developing a vine-pruning robot for the wine industry. The robot will use computer vision to build a 3D model of the vines. This model will then be used by an artificial intelligence system to guide a robotic pruning arm to cut away unwanted parts of the vine. This project is supported through partnership with industry and government funding from MSI.

Mosaicing Aerial Images: Aerial images can be orthorectified by projecting them onto a 3D terrain model. This removes distortions caused by the shape of the terrain and produces a flat, map-like orthoimage. Multiple orthoimages can then be stitched together to make a large orthomosaic image. I am working with Dr Phil McLeod at Areograph to find optimal seams along which to join orthoimages in such a mosaic.
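One standard way to find a good seam (not necessarily the method used in this project) is dynamic programming over a cost grid covering the overlap between two orthoimages. Here each cell's cost is assumed to be something like the absolute difference between the two images at that pixel, so that a cheap seam passes where the images agree. The sketch below finds a minimum-cost top-to-bottom seam that moves at most one column per row. The function name and cost definition are illustrative assumptions.

```python
def min_cost_seam(cost):
    """Find a minimum-cost top-to-bottom seam through a 2D cost grid.

    cost[r][c] is the penalty for cutting at pixel (r, c), e.g. the
    absolute difference between two overlapping orthoimages there.
    Returns a list giving the seam's column in each row.
    """
    rows, cols = len(cost), len(cost[0])
    # acc[r][c]: cheapest total cost of any seam ending at (r, c).
    acc = [list(cost[0])]
    # back[r][c]: column in row r - 1 that the cheapest seam came from.
    back = [[0] * cols]
    for r in range(1, rows):
        acc_row, back_row = [], []
        for c in range(cols):
            # The seam may step at most one column left or right per row.
            best = min(range(max(0, c - 1), min(cols, c + 2)),
                       key=lambda k: acc[r - 1][k])
            acc_row.append(cost[r][c] + acc[r - 1][best])
            back_row.append(best)
        acc.append(acc_row)
        back.append(back_row)
    # Start from the cheapest end point and trace the seam back upwards.
    c = min(range(cols), key=lambda k: acc[-1][k])
    seam = [c]
    for r in range(rows - 1, 0, -1):
        c = back[r][c]
        seam.append(c)
    return seam[::-1]
```

For example, `min_cost_seam([[5, 1, 5], [5, 5, 1], [5, 1, 5]])` follows the low-cost cells and returns `[1, 2, 1]`. Pixels on one side of the seam are then taken from the first orthoimage and the rest from the second.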

3D Models from Images: Much of my recent work in 3D reconstruction from images has been in creating terrain models from aerial images. However, I am also interested in modelling other objects. Currently I am working with Dr Natalie Smith to build 3D models of garments for heritage and educational applications, and I am also interested in modelling buildings and architectural spaces.

Direct 3D Sensing: While processing images is one way to recover 3D scene structure, other sensors can estimate 3D shape directly. One of particular interest is Microsoft's Kinect sensor, which is cheap and useful up to a range of several metres. I am working with honours students on Kinect-based projects to build 3D models and to construct a 3D tangible interface.
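A depth sensor such as the Kinect reports a distance for each pixel, and turning that into 3D geometry is a direct application of the pinhole camera model. The sketch below back-projects a depth image into camera-frame points. It assumes depth is measured along the optical axis in metres, that 0 marks a missing reading, and that the intrinsics `fx`, `fy`, `cx`, `cy` come from calibrating the sensor; no specific Kinect SDK call is used or implied.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into 3D points in the camera frame.

    depth[v][u] is the depth along the optical axis (assumed metres)
    for pixel column u and row v; 0 marks a pixel with no reading.
    fx, fy are focal lengths and (cx, cy) the principal point, all in
    pixels, assumed to come from a prior calibration of the sensor.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid measurement
            # Invert the pinhole projection u = fx * x / z + cx (same for y).
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Point clouds from several viewpoints can then be aligned and merged to build a complete model.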

Past Research

Most of my recent research has been commercially focused. Below are some examples of projects that I have been involved with, along with results and applications to real world problems.

Areograph

Photo-realistic Environments:

At Areograph I was extensively involved in developing a system for creating photo-realistic environments from images. This involves processing a large number of still images to create a 3D model of a scene. The final scene reconstruction is then dynamically textured with the original images. The use of dynamic texturing means that fine details and complex lighting effects are automatically captured and displayed to the viewer.
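A widely used form of dynamic texturing is view-dependent texture mapping, in which each surface point is coloured mainly from the source photographs taken from directions closest to the current viewpoint. The sketch below computes such blending weights. It is an illustration of that general technique under simple assumptions (inverse-angle weighting over the `k` nearest cameras), not a description of the Areograph system. The function and parameter names are hypothetical.

```python
import math


def view_weights(view_dir, camera_dirs, k=3):
    """Blend weights for the k source images best aligned with the view.

    view_dir: 3D vector from the surface point towards the virtual viewer.
    camera_dirs: 3D vectors from the same point towards each source camera.
    Returns {camera index: weight}, with weights summing to 1.
    """
    def unit(v):
        n = math.sqrt(sum(a * a for a in v))
        return tuple(a / n for a in v)

    vd = unit(view_dir)
    # Angle between the current viewing direction and each source camera.
    angles = []
    for i, d in enumerate(camera_dirs):
        cos_t = sum(a * b for a, b in zip(vd, unit(d)))
        angles.append((math.acos(max(-1.0, min(1.0, cos_t))), i))
    angles.sort()
    nearest = angles[:k]
    # Inverse-angle weights; eps avoids division by zero when the viewer
    # sits exactly on a source camera's viewing direction.
    eps = 1e-6
    raw = {i: 1.0 / (a + eps) for a, i in nearest}
    total = sum(raw.values())
    return {i: w / total for i, w in raw.items()}
```

Because the weights change as the viewer moves, reflections and other view-dependent lighting in the original photographs are reproduced rather than baked into a single static texture.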

A demonstration of the Areograph process and some results.

Areograph used in a digital heritage application at the Otago Early Settlers Museum.

Example of a scene generated from Hawkeye UAV imagery in a GIS package.

Geospatial Research Centre

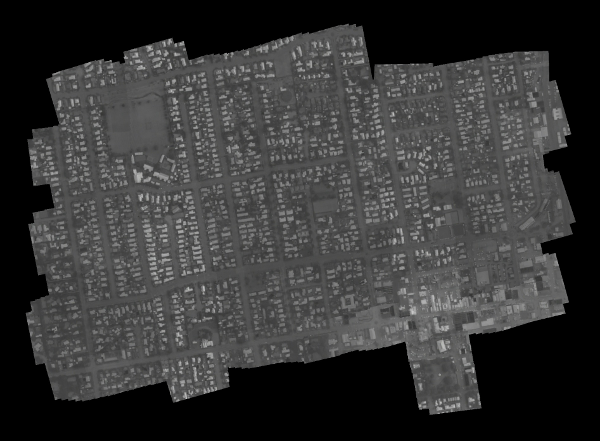

Thermal Mapping: This project created large-scale thermal maps of urban areas. A low-resolution (320×240 pixel) thermal camera was used to capture images from a light aircraft. These were then georeferenced by projecting them from known camera positions (from GPS and inertial sensors) onto a LiDAR terrain model. From this data, a mosaic image of a whole city can be built up.
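Projecting an image onto a terrain model amounts to casting a ray from the camera centre through each pixel and finding where it first meets the ground. The sketch below does this by marching along the ray in fixed steps and then refining the crossing by bisection. It assumes a local metric coordinate frame and a caller-supplied `height_at(x, y)` function (for example, a lookup into a LiDAR-derived elevation grid); both are illustrative assumptions rather than details of the original system.

```python
import math


def intersect_terrain(origin, direction, height_at, step=1.0,
                      max_range=5000.0, iterations=30):
    """Return the (x, y, z) point where a ray first meets the terrain.

    origin: camera centre (x, y, z) in a local metric frame, e.g. from GPS/INS.
    direction: viewing ray for one pixel, in the same frame.
    height_at: function (x, y) -> terrain height, e.g. a lookup into a
    LiDAR-derived elevation grid (assumed to be supplied by the caller).
    Returns None if no hit is found within max_range metres.
    """
    n = math.sqrt(sum(c * c for c in direction))
    d = [c / n for c in direction]

    def point(t):
        return [origin[i] + t * d[i] for i in range(3)]

    def above(t):
        x, y, z = point(t)
        return z > height_at(x, y)

    if not above(0.0):
        return None  # the camera itself is at or below the terrain
    t_prev, t = 0.0, step
    while t <= max_range:
        if not above(t):
            # The ray crossed the surface in (t_prev, t]; refine by bisection.
            lo, hi = t_prev, t
            for _ in range(iterations):
                mid = 0.5 * (lo + hi)
                if above(mid):
                    lo = mid
                else:
                    hi = mid
            return tuple(point(hi))
        t_prev, t = t, t + step
    return None
```

The step size trades speed against robustness: a step much larger than the terrain grid spacing can skip over narrow features such as buildings.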

Apart from some initial calibration, the image processing was fully automated. This included selecting images for mosaicing, reprojecting them onto the terrain model, and adjusting for brightness changes between images. The final system was used to mosaic around 250,000 images to create a 1 m resolution image of Christchurch city (over 400 km²). You can see some results at the Christchurch City Council website.
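Brightness adjustment between overlapping images is often posed as a small least-squares problem with one gain per image. The sketch below uses one standard formulation (not necessarily the one used in the Geospatial Research Centre system): it chooses gains so that overlapping images have matching mean intensity over their shared ground area, with a weak prior pulling each gain towards 1 so the trivial all-zero solution is excluded. The input format, a list of `(i, j, mean_i, mean_j)` tuples, is a hypothetical assumption.

```python
def gain_compensation(n_images, overlaps, prior_weight=0.01):
    """Solve for one brightness gain per image by linear least squares.

    overlaps: list of (i, j, mean_i, mean_j), where mean_i and mean_j are
    the average intensities of images i and j over their shared ground
    area (hypothetical input format). Minimises
        sum (g_i * mean_i - g_j * mean_j)^2 + prior_weight * sum (g_i - 1)^2
    by building and solving the normal equations A g = b.
    """
    n = n_images
    A = [[0.0] * n for _ in range(n)]
    b = [prior_weight] * n
    for i in range(n):
        A[i][i] = prior_weight
    for i, j, mi, mj in overlaps:
        A[i][i] += mi * mi
        A[j][j] += mj * mj
        A[i][j] -= mi * mj
        A[j][i] -= mi * mj
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= f * A[col][c]
            b[r] -= f * b[col]
    # Back substitution.
    g = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(A[r][c] * g[c] for c in range(r + 1, n))
        g[r] = (b[r] - s) / A[r][r]
    return g
```

For two images whose overlap means are 100 and 50, the solution is close to gains of 0.6 and 1.2, which equalise the overlap brightness at about 60. At the scale of a city-wide mosaic, a sparse solver would replace the dense elimination used here.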

Results from a test of the thermal mosaicing system over Rangiora.