Stefanie Zollmann - Research

Situated Visualization | Context-sensitive Interfaces | Projector-Camera Systems

VISGIS: Dynamic Situated Visualization for Geographic Information Systems

Situated Visualization of data from geographic information systems (GIS) faces several problems, such as limited visibility, limited legibility, information clutter, and a limited understanding of spatial relationships.

In this work, we address the challenges of visibility, information clutter, and understanding of spatial relationships with a set of dynamic Situated Visualization techniques. They target the special needs of Situated Visualization of GIS data, in particular for street-view-like perspectives as used in many navigation applications. The proposed techniques use GIS data as input to provide dynamic annotation placement, dynamic label alignment, and occlusion culling.

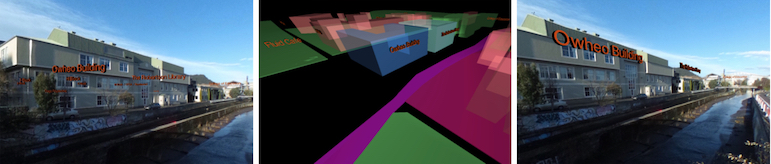

Dynamic label alignment: (Left) For unaligned labels, it is often difficult to understand their spatial relationship to the real-world objects. (Middle) Overview of the alignment along the building outlines in 3D. (Right) Alignment supports the understanding of the spatial relationship. Similar orientations support visual grouping.
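The idea of aligning a label along a building outline can be illustrated with a minimal sketch. It assumes a simplified 2D ground-plane footprint given as a list of vertices and picks the orientation of the outline edge closest to the label anchor. The function name `nearest_edge_angle` and the footprint representation are hypothetical and are not taken from the VISGIS implementation, which works on GIS data in 3D.

```python
import math


def nearest_edge_angle(outline, anchor):
    """Return the orientation (radians, in [0, pi)) of the outline edge
    closest to the label anchor point.

    outline: list of (x, y) vertices of a closed building footprint (assumed input).
    anchor:  (x, y) position of the label anchor on the ground plane.
    """
    best_dist = float("inf")
    best_angle = 0.0
    n = len(outline)
    for i in range(n):
        (x1, y1), (x2, y2) = outline[i], outline[(i + 1) % n]
        dx, dy = x2 - x1, y2 - y1
        length_sq = dx * dx + dy * dy
        if length_sq == 0.0:
            continue  # skip degenerate edges
        # Project the anchor onto the edge and clamp to the segment.
        t = ((anchor[0] - x1) * dx + (anchor[1] - y1) * dy) / length_sq
        t = max(0.0, min(1.0, t))
        px, py = x1 + t * dx, y1 + t * dy
        dist = math.hypot(anchor[0] - px, anchor[1] - py)
        if dist < best_dist:
            best_dist = dist
            # Fold the direction into [0, pi) so that opposite edge
            # directions yield the same label orientation.
            best_angle = math.atan2(dy, dx) % math.pi
    return best_angle


square = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
front_label = nearest_edge_angle(square, (5.0, -1.0))   # next to the bottom edge
side_label = nearest_edge_angle(square, (11.0, 5.0))    # next to the right edge
```

Labels placed next to the same facade receive the same orientation, which is the property the caption describes as supporting visual grouping.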

Stefanie Zollmann, Christian Poglitsch, Jonathan Ventura, VISGIS: Dynamic Situated Visualization for Geographic Information Systems, IVCNZ, 2016.

Augmented Reality for Construction Site Monitoring and Documentation

Augmented Reality allows for an on-site presentation of information that is registered to the physical environment. Applications from civil engineering, which require users to process complex information, are among those that benefit most from such a presentation. Within this work, we describe how to use Augmented Reality (AR) to support monitoring and documentation of construction site progress. For these tasks, the responsible staff usually require fast and comprehensible access to progress information to enable comparison to the as-built status as well as to as-planned data. Instead of tediously searching and mapping related information to the actual construction site environment, our AR system provides access to information right where it is needed.

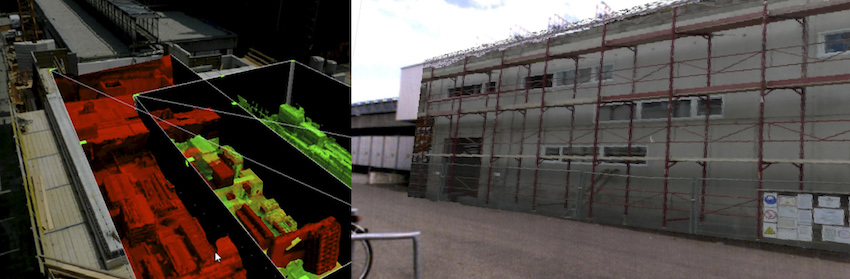

Visualization of 4D datasets. Left: Situated Visualization of two 3D datasets registered to aerial imagery using magic lens interface. Right: On-site Augmented Reality visualization of simplified 3D mesh representing a previous state of the construction site.

Stefanie Zollmann, Christof Hoppe, Stefan Kluckner, Christian Poglitsch, Horst Bischof, Gerhard Reitmayr, Augmented Reality for Construction Site Monitoring and Documentation, Proceedings of the IEEE, 102(2):137--154, 2014.

FlyAR: Augmented Reality Supported Micro Aerial Vehicle Navigation

Micro aerial vehicles equipped with high-resolution cameras can be used to create aerial reconstructions of an area of interest. In that context, automatic flight path planning and autonomous flying are often applied but cannot fully replace the human in the loop, who supervises the flight on-site to ensure that there are no collisions with obstacles. Unfortunately, this workflow raises several issues, such as the need to transfer the aerial vehicle's position between 2D map positions and the physical environment, and the complicated depth perception of objects flying in the distance. Augmented Reality can address these issues by bringing the flight planning process on-site and visualizing the spatial relationship between the planned or current positions of the vehicle and the physical environment. In this paper, we present Augmented Reality supported navigation and flight planning of micro aerial vehicles by augmenting the user's view with relevant information for flight planning and live feedback for flight supervision. Furthermore, we introduce additional depth hints supporting the user in understanding the spatial relationship of virtual waypoints in the physical world and investigate the effect of these visualization techniques on the spatial understanding.

Augmented Reality supported flight management for aerial reconstruction. (Left) Aerial reconstruction of a building. (Middle) The depth estimation for a hovering MAV in the distance is complicated due to missing depth cues. (Right) Augmented Reality provides additional graphical cues for understanding the position of the vehicle.

Stefanie Zollmann, Christof Hoppe, Tobias Langlotz, Gerhard Reitmayr, FlyAR: Augmented Reality Supported Micro Aerial Vehicle Navigation, IEEE Transactions on Visualization and Computer Graphics (TVCG), March 2014.

Image-based X-ray visualization techniques for spatial understanding in outdoor augmented reality

Within this work, we evaluated different state-of-the-art approaches for implementing an X-ray view in Augmented Reality (AR). Our focus is on approaches supporting a better scene understanding and in particular a better sense of depth order between physical objects and digital objects. One of the main goals of this work is to provide effective X-ray visualization techniques that work in unprepared outdoor environments. In order to achieve this goal, we focused on methods that automatically extract depth cues from video images. The extracted depth cues are combined in ghosting maps that are used to assign each video image pixel a transparency value to control the overlay in the AR view. Within our study, we analyze three different types of ghosting maps: 1) alpha-blending, which uses a uniform alpha value within the ghosting map, 2) edge-based ghosting, which is based on edge extraction, and 3) image-based ghosting, which incorporates perceptual grouping, saliency information, edges and texture details. Our study results demonstrate that the latter technique helps the user to understand the subsurface location of virtual objects better than using alpha-blending or the edge-based ghosting.
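The first two ghosting map types can be sketched in a few lines. This is a simplified illustration, not the implementation evaluated in the study: it assumes small grayscale images stored as nested lists with values in [0, 1], treats each ghosting map value as the opacity kept for the video pixel, and uses a plain forward-difference gradient with a hypothetical threshold as the edge detector. The image-based variant (perceptual grouping, saliency, texture) is not covered.

```python
def uniform_ghosting_map(height, width, alpha=0.5):
    """Alpha-blending: every video pixel gets the same opacity."""
    return [[alpha] * width for _ in range(height)]


def edge_ghosting_map(gray, threshold=0.2):
    """Edge-based ghosting: video pixels on strong intensity edges stay
    opaque (1.0), all other pixels become fully transparent (0.0).

    The forward-difference gradient and the threshold are assumptions
    made for this sketch.
    """
    h, w = len(gray), len(gray[0])
    ghost = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Forward differences, clamped at the image border.
            gx = gray[y][min(x + 1, w - 1)] - gray[y][x]
            gy = gray[min(y + 1, h - 1)][x] - gray[y][x]
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                ghost[y][x] = 1.0
    return ghost


def composite(video, virtual, ghost):
    """Blend per pixel: ghost * video + (1 - ghost) * virtual."""
    return [
        [g * v + (1.0 - g) * o for v, o, g in zip(v_row, o_row, g_row)]
        for v_row, o_row, g_row in zip(video, virtual, ghost)
    ]


# Video image with one vertical edge, and a uniform virtual (hidden) object.
video = [[0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
virtual = [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]

edge_map = edge_ghosting_map(video)
edge_view = composite(video, virtual, edge_map)
blend_view = composite(video, virtual, uniform_ghosting_map(2, 4, alpha=0.5))
```

With the uniform map, the occluding video image is attenuated everywhere, whereas the edge-based map keeps only the pixels at the intensity edge as an occlusion cue and reveals the virtual object elsewhere.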

Augmented Reality visualizations using different approaches of extracting depth cues from a video image. (Left) Random occlusion cues randomly preserve image information but cannot convey the depth order. (Middle) Only edges are preserved to provide depth cues. (Right) Using important image regions based on a saliency computation as depth cues creates the impression of subsurface objects.

Stefanie Zollmann, Raphael Grasset, Gerhard Reitmayr, and Tobias Langlotz, Image-based X-ray visualization techniques for spatial understanding in outdoor augmented reality, ACM OzCHI 2014, 2014

Adaptive Ghosted Views for Augmented Reality

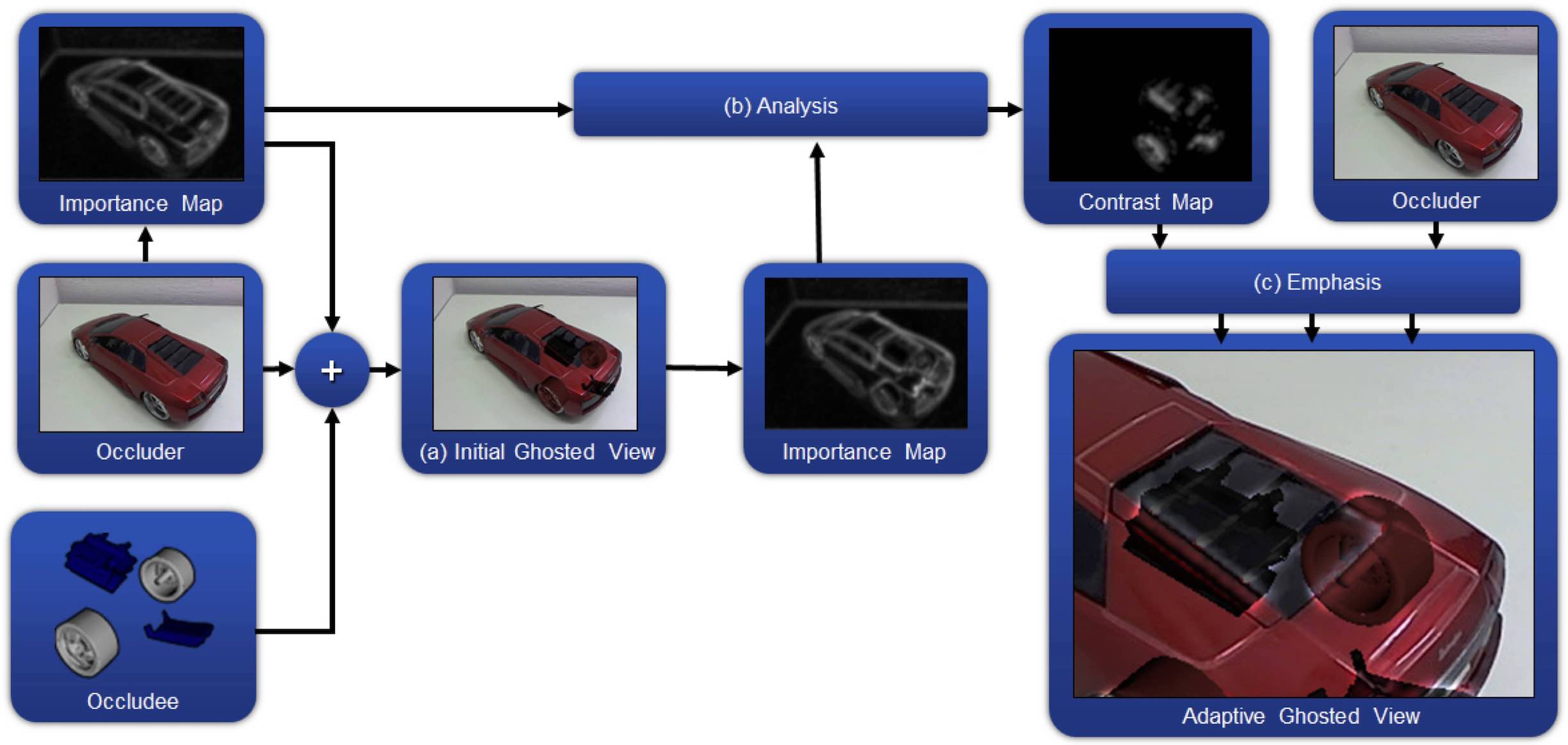

In Augmented Reality (AR), ghosted views allow a viewer to explore hidden structure within the real-world environment. A body of previous work has explored which features are suitable to support the structural interplay between occluding and occluded elements. However, the dynamics of AR environments pose serious challenges to the presentation of ghosted views. While a model of the real world may help determine distinctive structural features, changes in appearance or illumination are detrimental to the composition of occluding and occluded structure. In this paper, we present an approach that considers the information value of the scene before and after generating the ghosted view. From this, a contrast adjustment of preserved occluding features is calculated, which adaptively varies their visual saliency within the ghosted view visualization. This allows us to not only preserve important features, but to also support their prominence after revealing occluded structure, thus achieving a positive effect on the perception of ghosted views.

Method overview of adaptive ghosted views. (a) Occluder and occludee are combined into a ghosted view by using state-of-the-art transparency mapping of the importance map of the occluder. The resulting ghosted view is missing important features. Therefore, another importance map is calculated on the resulting ghosted view. (b) This importance map is compared to the importance map of the occluder. Elements that were identified as important for the occluder, but are no longer detected as important in the ghosted view, will be emphasized. (c) After applying the emphasis on the adaptive ghost, important structures are again clearly visible.
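The comparison in step (b) can be sketched as follows. This is only an illustration under stated assumptions: importance maps are nested lists with values in [0, 1], importance is decided by a hypothetical threshold, and the emphasis is modelled as a simple opacity boost of the occluder. The paper describes a contrast adjustment instead, which this sketch does not reproduce.

```python
def lost_feature_mask(occluder_importance, ghosted_importance, threshold=0.5):
    """Mark pixels that were important in the occluder but are no longer
    detected as important in the ghosted view (threshold is an assumption)."""
    return [
        [o >= threshold and g < threshold for o, g in zip(o_row, g_row)]
        for o_row, g_row in zip(occluder_importance, ghosted_importance)
    ]


def emphasize(occluder_opacity, mask, boost=0.5):
    """Raise the occluder opacity where features were lost.

    Stand-in for the contrast adjustment described in the paper; the
    boost value is a hypothetical parameter.
    """
    return [
        [min(1.0, a + boost) if m else a for a, m in zip(a_row, m_row)]
        for a_row, m_row in zip(occluder_opacity, mask)
    ]


occluder_importance = [[0.9, 0.9, 0.1]]
ghosted_importance = [[0.8, 0.2, 0.1]]   # the middle feature faded in the ghosted view
opacity = [[0.75, 0.25, 0.0]]

mask = lost_feature_mask(occluder_importance, ghosted_importance)
adapted = emphasize(opacity, mask)
```

Only the middle pixel is emphasized: the first is still prominent in the ghosted view, and the third was never important in the occluder.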

Denis Kalkofen, Eduardo Veas, Stefanie Zollmann, Markus Steinberger, and Dieter Schmalstieg, Adaptive Ghosted Views for Augmented Reality, International Symposium on Mixed and Augmented Reality (ISMAR 2013), 2013.

Towards Pervasive Augmented Reality: Context-Awareness in Augmented Reality

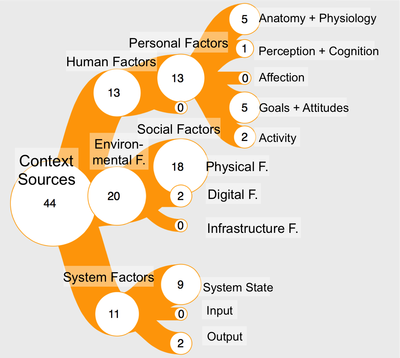

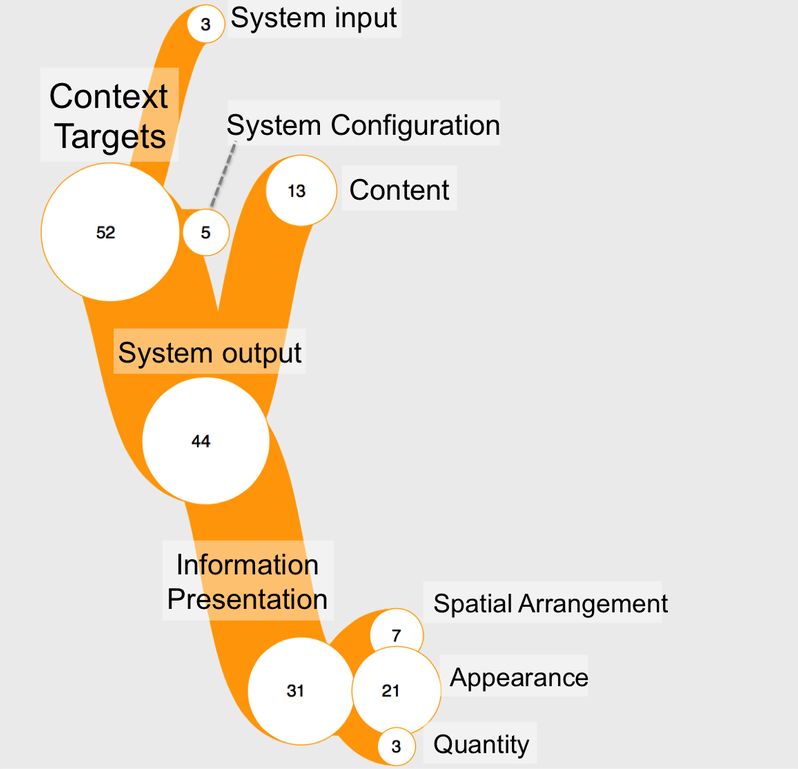

Augmented Reality is a technique that enables users to interact with their physical environment through the overlay of digital information. Although it has been researched for decades, Augmented Reality has only recently moved out of the research labs and into the field. While most of the applications are used sporadically and for one particular task only, current and future scenarios will provide a continuous and multi-purpose user experience. Therefore, we present the concept of Pervasive Augmented Reality, aiming to provide such an experience by sensing the user's current context and adapting the AR system based on the changing requirements and constraints. We present a taxonomy for Pervasive Augmented Reality and context-aware Augmented Reality, which classifies context sources and context targets relevant for implementing such a context-aware, continuous Augmented Reality experience. We further summarize existing approaches that contribute towards Pervasive Augmented Reality. Based on our taxonomy and survey, we identify challenges for future research directions in Pervasive Augmented Reality.

Interactive visualizations for context sources (Left) and context targets (Right). Access the interactive version by clicking on the images.

Jens Grubert, Tobias Langlotz, Stefanie Zollmann, Holger Regenbrecht, Towards Pervasive Augmented Reality: Context-Awareness in Augmented Reality, IEEE Transactions on Visualization and Computer Graphics (IEEE TVCG)

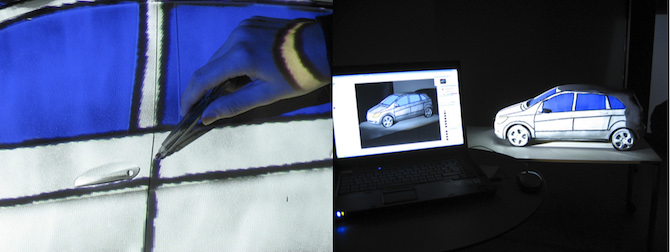

Spatially Augmented Tape Drawing

Tape drawings are an important part of the form finding process in the automotive industry and thus for creating the final design and shape of cars during the product development process. Up to now this step is done on white boards in 2D and on clay models. In this poster we present a system that supports designers during the tape drawing process by transferring drawings created in 2D to the clay model by using projector-based spatial augmented reality. Furthermore we show an optional 3D input method for creating tape drawings directly on the clay model that additionally allows the transmission of information into a 2D representation using a registered projector-camera system. This system guarantees the consistency of information in different media and dimensions during the design process.

Spatially Augmented Tape Drawings. Left: Creating tape drawings with an IR-LED pen on a clay model. Right: Tape drawings and additional accentuations, like blue windows, are made in Photoshop and simultaneously projected onto the clay model.

Stefanie Zollmann and Tobias Langlotz, Spatially Augmented Tape Drawing, IEEE Symposium on 3D User Interfaces 2009 (3DUI) (Poster)

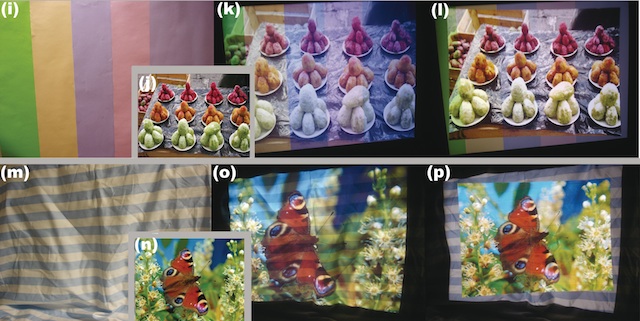

Imperceptible Calibration for Radiometric Compensation

In this work, we developed an imperceptible geometry and radiometry calibration of projector-camera systems. Our approach can be used to display geometry and color corrected images on non-optimized surfaces at interactive rates while simultaneously performing a series of invisible structured light projections during runtime. It supports disjoint projector-camera configurations, fast and progressive improvements, as well as real-time correction rates of arbitrary graphical content. The calibration is automatically triggered when mis-registrations between camera, projector and surface are detected.
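To give an intuition for what a color-corrected projection involves, the following sketch uses a simplified per-pixel model that is common in the radiometric compensation literature. It is an assumption for illustration and is not stated on this page: the observed value is modelled as `R = I * FM + EM`, where `I` is the projector input, `FM` the per-pixel surface and projector contribution, and `EM` the environment light. The names `compensate`, `fm`, and `em` are hypothetical, and images are single-channel nested lists with values in [0, 1].

```python
def compensate(desired, fm, em, eps=1e-6):
    """Compute the projector input I so that I * FM + EM approximates the
    desired appearance R, i.e. I = (R - EM) / FM, clipped to the
    displayable range [0, 1].

    desired, fm, em: nested lists of equal shape (assumed calibration data).
    eps guards against division by zero on very dark surface pixels.
    """
    return [
        [min(1.0, max(0.0, (r - e) / max(f, eps)))
         for r, f, e in zip(r_row, f_row, e_row)]
        for r_row, f_row, e_row in zip(desired, fm, em)
    ]


desired = [[0.5, 0.5, 0.9]]
fm = [[1.0, 0.5, 0.5]]    # second and third pixels reflect only half the light
em = [[0.0, 0.25, 0.0]]   # second pixel is lit by ambient light
projector_input = compensate(desired, fm, em)
```

The third pixel shows the limit of such a correction: the required input exceeds the projector's range and is clipped, so the desired appearance cannot be reached on that part of the surface.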

Sample results for different surfaces and multiple images: Original surfaces (i,m). Without compensation (k,o). Corrected projection using our technique (l,p).

Stefanie Zollmann and Oliver Bimber, Imperceptible Calibration for Radiometric Compensation, In Proceedings of EUROGRAPHICS 2007, pp. 61-64, 2007